Zero-trust network with Consul Connect & Docker

TL;DR: Docker’s network namespacing can be used to enforce a service-to-service zero-trust network with Consul Connect. To do so, you override a few default settings of the Consul agent and of the app’s proxy so they can talk to each other, and you use Docker’s “container” network mode so that the app and its proxy communicate through the shared loopback interface of their own private namespace.

First attempt

There’s not much documentation about how you’re supposed to run Consul Connect with Docker containers. The official documentation advises putting all your containers in Docker’s “host” network so they bind directly to the host’s interfaces without any network namespacing. This actually works well.

Let’s take the example of two service definitions (say, caller and echo), each service running on its own VM.

    service {
      name = "caller"
      port = 9000
      connect {
        sidecar_service {
          proxy {
            upstreams = [
              {
                destination_name = "echo"
                local_bind_port  = 12345
              }
            ]
          }
        }
      }
    }

    service {
      name = "echo"
      port = 5678
      connect {
        sidecar_service {}
      }
    }

This means that if caller sends traffic to port 12345 on the interface caller_proxy binds to, the traffic is routed to echo_proxy, which forwards it to echo on port 5678.

Just a small network isolation problem…

Wait… how does caller_proxy know that the traffic actually comes from caller?

… It doesn’t! Anything able to reach it on the right port is treated as caller itself.

So if a container on the same host is compromised, nothing prevents an attacker from sending traffic to the loopback interface on the right port and using caller as its next hop into our network (since we used Docker’s “host” network mode, all containers share the same interfaces).

It’s easy to try: run an attacker container (any image will do) on the host network and send traffic to the loopback interface.

Ex:

sudo docker run --network=host busybox wget -qO- http://localhost:12345

You should get a response from echo.

It’s not a design flaw: if you read HashiCorp’s documentation carefully, it’s written there (maybe not super obviously, but it’s there).

So our “zero-trust network” is not service-to-service but IP-to-IP, which may not be what you want.
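To make the point concrete without Consul at all, here is a minimal Python sketch. It assumes nothing more than a plain TCP listener on 127.0.0.1 standing in for caller_proxy’s upstream port; the function names and the “caller” / “attacker” labels are made up for the illustration. It shows that a loopback listener only ever sees a source IP, so two different local clients are indistinguishable.

```python
import socket
import threading


def serve(listener, seen_peers, expected):
    """Accept `expected` connections and record each peer's source IP."""
    for _ in range(expected):
        conn, (peer_ip, _peer_port) = listener.accept()
        with conn:
            conn.recv(64)               # whatever the client claims to be
            seen_peers.append(peer_ip)  # all the listener really knows about the sender
            conn.sendall(b"forwarded to echo\n")


def call_upstream(port, who):
    """Any local process can do this; `who` is never verified by the listener."""
    with socket.create_connection(("127.0.0.1", port), timeout=2) as s:
        s.sendall(who.encode())
        return s.recv(64)


# Stand-in for caller_proxy's upstream listener (port 0 = pick any free port).
listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
listener.bind(("127.0.0.1", 0))
listener.listen()
port = listener.getsockname()[1]

seen_peers = []
server = threading.Thread(target=serve, args=(listener, seen_peers, 2))
server.start()

replies = [call_upstream(port, who) for who in ("caller", "attacker")]
server.join()
listener.close()

print(seen_peers)  # both connections come from the same loopback address
print(replies)     # and both get exactly the same treatment
```

With the “host” network mode, every container on the VM is in the position of the second client.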

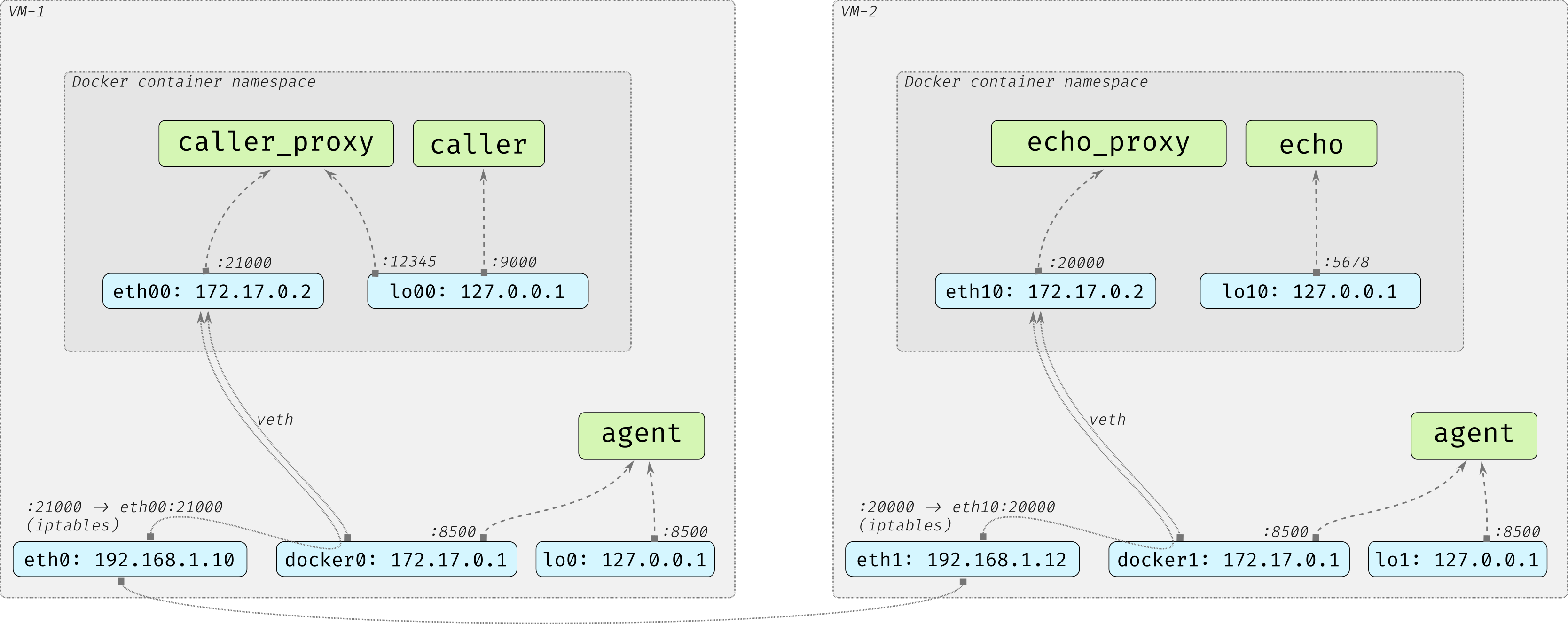

Just a quick recap of how everything works here, from 10,000 feet

Before we dive into a configuration that enforces network isolation between containers on the same host, let’s see how traffic flows with Consul Connect.

First, there are not 4 elements directly at play in our little request, but 6:

- on VM-1: caller, caller_proxy, vm1_agent

- on VM-2: echo, echo_proxy, vm2_agent

(I don’t count the consul servers.)

Here’s the sequence for a request from caller to echo (according to the service definitions above):

- on VM-1
  - caller sends traffic to caller_proxy on port 12345
  - caller_proxy reaches vm1_agent on port 8500
  - vm1_agent does its service discovery job and returns both VM-2’s IP and echo_proxy’s “secure” port
  - caller_proxy forwards the request there
- on VM-2
  - echo_proxy receives the request
  - echo_proxy calls vm2_agent on port 8500 to know if the request is allowed
  - vm2_agent responds ok
  - echo_proxy sends traffic to echo on port 5678

Proxies are clearly the central piece with Connect : they must be able to talk with their app (echo or caller in our example), a consul agent and another proxy on the destination host.
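The “is the request allowed” step can be pictured as a lookup in a list of (source service, destination service) rules; in Consul these rules are called intentions. The sketch below is a deliberately simplified model, not Consul’s implementation: the `Rule` type, the `RULES` list and the deny-by-default behaviour are assumptions made for the illustration.

```python
from typing import NamedTuple


class Rule(NamedTuple):
    source: str
    destination: str
    allow: bool


# Hypothetical rule set; in a real cluster the rules live in Consul, not in the proxy.
RULES = [Rule("caller", "echo", True)]


def is_allowed(source, destination, rules=RULES, default=False):
    """Return the decision of the first matching rule, else the default."""
    for rule in rules:
        if (rule.source, rule.destination) == (source, destination):
            return rule.allow
    return default


print(is_allowed("caller", "echo"))  # a rule matches and allows it
print(is_allowed("other", "echo"))   # no rule matches: falls back to the default
```

Note that the decision is keyed on the service identity presented by the calling proxy, not on the process that actually sent bytes to that proxy, which is why protecting the proxy’s local port matters.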

A more secure approach

Ok, here’s a simple solution to improve the security and avoid “east-west” traffic between containers running on the same host. Since the setup is the same on both sides, I’ll say “the app” for both apps, “the proxy” for their respective proxy and “the agent” for their respective Consul agent running in client mode.

Before describing how things are configured, here’s how they will be run:

- the agent : a process on the host

- the app : a docker container

- the proxy : a docker container

Here’s what we’ll build :

1 - Allow docker containers to send traffic to the Consul client

By default, Consul agents listen for HTTP traffic on the loopback interface only, but this can be overridden: we add the Docker bridge IP address (here 172.17.0.1).

On both agents configs :

    addresses {
      http = "127.0.0.1 172.17.0.1"
    }

As a result, all traffic sent to port 8500 on the host’s loopback or on the Docker bridge is received by the Consul agent.
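A quick way to check this from inside a container is to query the agent’s HTTP API on the bridge address. The following is a small sketch using only the Python standard library; `agent_url` and `agent_reachable` are hypothetical helper names, and `/v1/status/leader` is used simply because it is a cheap read-only endpoint of the Consul HTTP API.

```python
import urllib.error
import urllib.request


def agent_url(host, path="/v1/status/leader", port=8500):
    """Build the URL of a Consul agent HTTP API endpoint."""
    return f"http://{host}:{port}{path}"


def agent_reachable(host, port=8500, timeout=2.0):
    """True if the agent answers HTTP 200 on its status endpoint."""
    try:
        with urllib.request.urlopen(agent_url(host, port=port), timeout=timeout) as resp:
            return resp.status == 200
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, HTTP error: treat all of them as "not reachable".
        return False
```

Run from a container on the default bridge, `agent_reachable("172.17.0.1")` should return `True` once the agent has been restarted with the setting above, and `False` before.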

2 - Fix the port on which the proxy listens for authenticated traffic

By default, proxy ports are assigned dynamically, but this can be overridden. We update the service definitions above and give each sidecar a fixed port: 21000 for caller’s proxy and 20000 for echo’s. This will be useful in the next step.

    service {
      name = "caller"
      port = 9000
      connect {
        sidecar_service {
          proxy {
            upstreams = [
              {
                destination_name = "echo"
                local_bind_port  = 12345
              }
            ]
          }
          port = 21000
        }
      }
    }

    services = {
      name = "echo"
      port = 5678
      connect = {
        sidecar_service = {
          checks = [
            {
              Name          = "Connect Sidecar Listening"
              alias_service = "echo"
            }
          ]
          port = 20000
        }
      }
    }

3 - Start the proxy containers in the default bridge network

Each proxy is started in a normal “bridge” network and publishes its “secure” port on the host.

sudo docker run --rm --name caller_proxy -p 21000:21000 consul connect proxy --sidecar-for caller --http-addr 172.17.0.1:8500

sudo docker run --rm --name echo_proxy -p 20000:20000 consul connect proxy --sidecar-for echo --http-addr 172.17.0.1:8500

Each proxy can now receive traffic from the outside.

Notice the http-addr flag: it overrides the address at which the proxy tries to reach the Consul agent. It’s the IP we added to the agent configuration at step 1.

4 - Start the app as a sidecar of the proxy

The proxy binds its “unsecure” port to the loopback interface, so the app must share the same loopback in order to send traffic to it. This is easily done by starting the app with Docker’s “container” network mode:

sudo docker run --rm --name caller --network=container:caller_proxy amouat/network-utils python -m SimpleHTTPServer 9000

sudo docker run --rm --name echo --network=container:echo_proxy hashicorp/http-echo -text="hi from echo server"

Don’t worry about the Python HTTP server: it’s only there to listen on the port declared in caller’s service definition.
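Since the proxy and the app always go in pairs, the two `docker run` invocations of steps 3 and 4 can be generated from the service name and the sidecar port. Here is a rough sketch; `proxy_cmd` and `app_cmd` are hypothetical helpers that only build the argument lists, using the same flags as the commands above, and leave execution to the caller.

```python
import shlex
from typing import List

AGENT_ADDR = "172.17.0.1:8500"  # Docker bridge IP and agent HTTP port from step 1


def proxy_cmd(service: str, public_port: int) -> List[str]:
    """docker run arguments for the sidecar proxy, publishing its secure port."""
    return [
        "docker", "run", "--rm", "--name", f"{service}_proxy",
        "-p", f"{public_port}:{public_port}",
        "consul", "connect", "proxy",
        "--sidecar-for", service, "--http-addr", AGENT_ADDR,
    ]


def app_cmd(service: str, image: str, *args: str) -> List[str]:
    """docker run arguments for the app, joining the proxy's network namespace."""
    return [
        "docker", "run", "--rm", "--name", service,
        f"--network=container:{service}_proxy",
        image, *args,
    ]


# Print the pair of commands for echo; the proxy must be started first,
# because the app joins the proxy container's namespace.
for cmd in (proxy_cmd("echo", 20000),
            app_cmd("echo", "hashicorp/http-echo", "-text=hi from echo server")):
    print(shlex.join(cmd))
```

Passing the lists to `subprocess.run` (prefixed with `sudo` if needed) would start the containers; building lists rather than strings avoids quoting problems with arguments such as the echo text.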

5 - Conclusion

Just open a shell in the caller container and run:

curl localhost:12345

You’ll get a response from echo !

Here’s what we have now :

- the agent listens on the Docker bridge, so the proxy can talk to it through this interface.

- the proxy exposes its “secure” port on the host, so it can receive traffic from the outside.

- the app is the only one sharing the proxy’s loopback, so no one else can use the proxy’s “unsecure” port.
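The three properties above can be checked with a simple TCP probe run from different vantage points. The helper below is a minimal sketch (the name `can_connect` is made up); it only tells whether a TCP connection can be opened, not whether the peer would accept the request.

```python
import socket


def can_connect(host, port, timeout=2.0):
    """True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused, unreachable or timed out: the port is not usable from here.
        return False
```

Run inside the caller container, `can_connect("127.0.0.1", 12345)` should be `True`. Run on the host or from any other container, the same call should be `False`, because the listener only exists on the loopback of the namespace shared by caller and caller_proxy. Port 21000 on the host’s address, on the other hand, should be reachable from anywhere, and that port is the one protected by mutual TLS.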

NB: we could have started the app first, publishing the proxy’s secure port on it, and then started the proxy as a sidecar of the app. It would have worked too, but it feels less natural to me.

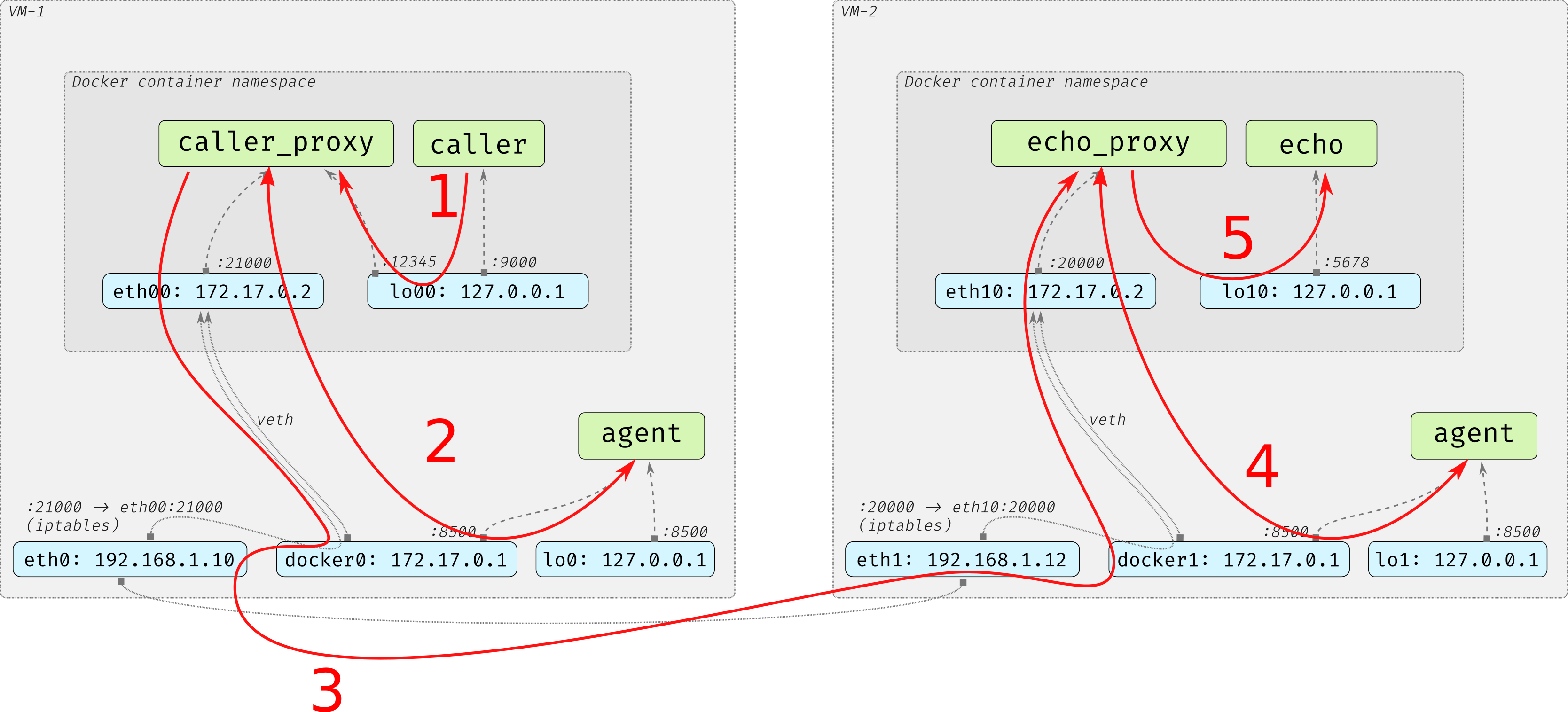

6 - BONUS : A request’s journey

Let’s just remove the magic and describe the steps involved in order to allow caller to reach echo by sending a request to its own loopback interface !

Step 1 : caller to caller_proxy

caller sends the request to 127.0.0.1:12345

- since caller_proxy is listening there, it gets the request directly.

Step 2 : caller_proxy to agent

caller_proxy sends the request to 172.17.0.1:8500

- the routing table is applied, the gateway for this IP is eth00

- eth00 is not the destination so it forwards the packets to its gateway: docker0

- agent is listening to docker0:8500: it gets the request and replies

Step 3 : caller_proxy to echo_proxy

caller_proxy sends the request to 192.168.1.12:20000

- it starts like the previous step:
  - the routing table is applied, the gateway for this IP is eth00
  - eth00 is not the destination so it forwards the packets to its gateway: docker0

- docker0 is not the destination, the host routing table is applied, the gateway for this IP is eth0

- eth0 is not the destination; say this IP is in the ARP table: the request is sent directly to it on port 20000

- on VM2, the iptables rule created by Docker matches the request and rewrites its destination IP (DNAT) to 172.17.0.2, keeping the port 20000; docker1 is the gateway for this IP

- docker1 is not the destination so it forwards the request to eth10:20000

- echo_proxy is listening to eth10:20000 so it receives the request

Step 4 : echo_proxy to agent

echo_proxy sends the request to 172.17.0.1:8500 (same as step 2)

- the routing table is applied, the gateway for this IP is eth10

- eth10 is not the final destination so it forwards the packets to its gateway: docker1

- agent is listening to docker1:8500: it gets the request and replies

Step 5 : echo_proxy to echo

echo_proxy sends the request on lo10:5678

- since echo is listening there, it receives the request. DONE!
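The “routing table is applied” part of steps 2 to 4 is a longest-prefix match. As an illustration, here is a sketch of that lookup with Python’s `ipaddress` module. The two routes are an assumption: they mirror what a container on Docker’s default bridge typically has (a default route via 172.17.0.1 and an on-link route for 172.17.0.0/16), with eth00 being the name used above for caller_proxy’s interface.

```python
import ipaddress
from typing import NamedTuple, Optional


class Route(NamedTuple):
    network: ipaddress.IPv4Network
    gateway: Optional[str]  # None means the destination is directly on-link
    device: str


# Assumed routing table inside caller_proxy's namespace (default Docker bridge).
ROUTES = [
    Route(ipaddress.ip_network("0.0.0.0/0"), "172.17.0.1", "eth00"),
    Route(ipaddress.ip_network("172.17.0.0/16"), None, "eth00"),
]


def lookup(dest, routes=ROUTES):
    """Longest-prefix match: the most specific route containing dest wins."""
    addr = ipaddress.ip_address(dest)
    matches = [r for r in routes if addr in r.network]
    return max(matches, key=lambda r: r.network.prefixlen)


for dest in ("172.17.0.1", "192.168.1.12"):
    route = lookup(dest)
    print(dest, "->", route.gateway or "on-link", "via", route.device)
```

The agent’s address (172.17.0.1) is on-link, so the packet goes straight to docker0, while echo_proxy’s address (192.168.1.12) only matches the default route and is handed to docker0 as a gateway, which is where the host’s own routing table takes over.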